Vectors

Introduction

This post is part one of an experimental course in linear algebra. It will differ from the conventional treatment in a number of ways:

- in the use of geometric and graphical arguments throughout, in preference to proofs and especially to matrix-based derivations.

- in the use of “generalized inverses”, introduced initially to implement an analog of “division” for the dot and cross products.

- in the use of exterior algebra and wedge products, with the goal of making the determinant, when it arrives, completely trivial.

- in the use of unconventional notations for the atomic operations out of which more complex operations (like matrix inversion) will be defined.

The level of technicality will be inconsistent. This is not intended to be pedagogical, but rather to develop the ideas which would be in a pedagogical treatment in a logical order, while justifying the various decisions and notations. These discussions will tend to be more technical, but after they are all stripped away, the ideal result would be a course which could plausibly be taught at a late-high-school level—a high bar, or a low one, I suppose.

We begin with the simplest case.

One Dimension

Vectors

Behold a vector:

![]()

For now I’ll notate this as

We can make a longer vector by doubling it:

This is the same as adding

We can easily imagine multiplying

There’s no reason

Then we can add and subtract these, which simply adds or subtracts their coefficients:

Therefore

We can divide by a constant, which just acts on the coefficient

We can even divide two vectors by each other, as long as the denominator isn’t zero:

If one is reversed, we get the negative:

(Note: this notion of dividing vectors is the first of many unconventional things in this post.)
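The one-dimensional operations above can be sketched in code. This is only an illustration under my own assumptions: the class name `Vec1` and the choice to store a vector as a single coefficient of one fixed reference arrow are hypothetical, not notation from this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec1:
    """A 1D vector, stored as a multiple of one fixed reference arrow (hypothetical name)."""
    coeff: float

    def __add__(self, other: "Vec1") -> "Vec1":
        # Adding vectors adds their coefficients.
        return Vec1(self.coeff + other.coeff)

    def __sub__(self, other: "Vec1") -> "Vec1":
        # Subtracting vectors subtracts their coefficients.
        return Vec1(self.coeff - other.coeff)

    def __mul__(self, k: float) -> "Vec1":
        # Scaling by a constant acts on the coefficient.
        return Vec1(self.coeff * k)

    __rmul__ = __mul__  # allow `2 * x` as well as `x * 2`

    def __truediv__(self, other):
        if isinstance(other, Vec1):
            # Vector divided by vector gives a plain number (a scalar).
            if other.coeff == 0:
                raise ZeroDivisionError("cannot divide by the zero vector")
            return self.coeff / other.coeff
        # Vector divided by a constant gives a vector.
        return Vec1(self.coeff / other)


x = Vec1(1.0)                 # the reference arrow itself
print(2 * x == x + x)         # True: doubling is the same as adding x to itself
print((6 * x) / (3 * x))      # 2.0
print((6 * x) / (-3 * x))     # -2.0: a reversed denominator flips the sign
print((6 * x) / 3 == 2 * x)   # True
```

Nothing in the class says what the reference arrow actually is. Only the coefficients take part in the arithmetic.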

The set of all multiples of this single vector

This

Note we can also get the same vector space

We can see this construction as an operation "

And in fact, apparently, it hardly matters what the vectors in the space are multiples of—the important feature of this “vector space”

We can therefore “vectorize” any single object and get a vector space with the same structure as

Length

Now, what is the length of this arrow

Well, it’s

And any constant multiple

Maybe we think of

A vector, then, has no inherent length—not until we pick some reference vector to measure it with. Then its length carries “units” of that unit length. (The zero vector

Given our one-dimensional vector space

Multiplication

Above, we demonstrated the following operations:

- vector times scalar, giving a vector.

- vector divided by scalar, giving a vector.

- vector divided by (nonzero) vector, giving a scalar, as in

What’s missing is the multiplication of two vectors. The obvious definition would be:

but we get something that is neither a multiple of

This seems sensible enough, but we’ll have to see if it’s useful.

Two Dimensions

Vectors

So far our vectors aren’t very interesting: they act just like the real numbers

Now imagine we start with two objects. Unlike

We’ll notate these as, what else,

Now, clearly we could create a one-dimensional vector space out of either of these individually, with all of the properties defined above. The two spaces would be:

It’s easy to imagine the next step: we create a single space out of both vectors by considering any linear combination of the two, i.e.

Some elements of

Of course, we get a unique vector for each ordered pair

Components

If we have some arbitrary vector in our two-dimensional

Because no amount of

At this point all these arrows are cumbersome, so we’ll switch to a normal notation. Henceforth we write vectors as

We’ll write the projection of

Now, we originally constructed this vector space out of our two vectors

and the components of the same

Therefore the set of numbers describing the specific vector, such as

Any “basis” of

We will always think of a vector as being a fundamentally “geometric” object, rather than a “set of coordinates”—the coordinates are a description of the vector in a basis. A basis, then, can be thought of as a “lens” we can look at the space through. Many such lenses are possible, and not all see the space the same way, but the space also has inherent properties that do not depend on the lens we use.
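To make the "lens" idea concrete, here is a small sketch that describes one fixed arrow in two different bases. The function name `components` is hypothetical. To put arrows into a computer at all I have to write them as number pairs, so the tuples below are assumed to be positions on the page where the picture is drawn; they are an artifact of the code, not a preferred basis.

```python
def components(v, e1, e2):
    """Return (a, b) such that v = a*e1 + b*e2, for 2D tuples in page coordinates."""
    det = e1[0] * e2[1] - e1[1] * e2[0]
    if det == 0:
        raise ValueError("e1 and e2 are parallel, so they do not form a basis")
    a = (v[0] * e2[1] - v[1] * e2[0]) / det
    b = (e1[0] * v[1] - e1[1] * v[0]) / det
    return a, b


v = (3.0, 2.0)                          # one fixed arrow on the page

basis_1 = ((1.0, 0.0), (0.0, 1.0))      # first lens
basis_2 = ((1.0, 1.0), (1.0, -1.0))     # second lens, a different pair of arrows

print(components(v, *basis_1))          # (3.0, 2.0)
print(components(v, *basis_2))          # (2.5, 0.5)

# Both descriptions rebuild the same arrow.
a, b = components(v, *basis_2)
e1, e2 = basis_2
print((a * e1[0] + b * e2[0], a * e1[1] + b * e2[1]))   # (3.0, 2.0)
```

The two coordinate pairs differ, but the arrow they describe does not.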

Length

In one dimension we observed that our vectors had no “inherent” length. But we were able to define the length of a vector in “units” of some standard vector. Choosing

But we could just as easily choose

Clearly we could do the same for any individual direction in two dimensions.

Now, how should we define the length of a two-dimensional vector in general? The obvious answer is to assert that some pair of basis vectors both have length “1”. The obvious choice is the pair of vectors we originally used to build the space

Once two unit vectors are chosen we can define a length by a standard Pythagorean theorem:

But, just as in 1D, we could as easily choose some other set of vectors to be the “unit” of length, such as

Therefore the “lengths” of vectors are only defined up to choice of basis. And, while it might seem like

If you define

The concept of length is not actually necessary to work with vectors at all. While all the vectors we’ll consider come with a natural notion of length, it might make more sense to do without one when considering, for example, a vector space of “apples” and “oranges”.

We will again aim to view our vectors as “geometric” or “graphical” objects, and therefore will not consider any vector to have an inherent length, except in view of a particular set of reference vectors, just as we did not consider vectors to have inherent coordinates, except with respect to a basis.

The operation of “length” can then be seen as an act of “measurement” with respect to a set of unit-length reference vectors. We call choosing these reference vectors choosing a “metric”. Just like the choice of “basis”, the choice of “metric” acts like a lens through which the vector space can be viewed and described. In the simplest cases, the chosen basis consists of unit-length vectors, which is the case when we use

Often we simplify the whole description by considering bases comprised of perpendicular and unit-length vectors, i.e., “orthonormal bases”. Then the lengths of all vectors can be equally well measured in any orthonormal basis using a Pythagorean theorem.
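Here is a sketch of length as a measurement against reference vectors. The names `components` and `length_in` are hypothetical, and the tuples are again assumed to be page coordinates used only to get arrows into the computer. The function declares a pair of reference arrows to be perpendicular and of length 1, then applies the Pythagorean theorem to the components.

```python
import math


def components(v, e1, e2):
    """Return (a, b) such that v = a*e1 + b*e2, for 2D tuples in page coordinates."""
    det = e1[0] * e2[1] - e1[1] * e2[0]
    if det == 0:
        raise ValueError("e1 and e2 are parallel, so they do not form a basis")
    a = (v[0] * e2[1] - v[1] * e2[0]) / det
    b = (e1[0] * v[1] - e1[1] * v[0]) / det
    return a, b


def length_in(v, u1, u2):
    """Length of v when u1 and u2 are declared perpendicular and of length 1."""
    a, b = components(v, u1, u2)
    return math.hypot(a, b)   # Pythagorean theorem on the components


v = (3.0, 4.0)

standard = ((1.0, 0.0), (0.0, 1.0))
c, s = math.cos(0.3), math.sin(0.3)
rotated = ((c, s), (-s, c))             # orthonormal with respect to `standard`
doubled = ((2.0, 0.0), (0.0, 2.0))      # a different choice of unit length

print(length_in(v, *standard))          # 5.0
print(length_in(v, *rotated))           # approximately 5.0
print(length_in(v, *doubled))           # 2.5
```

The rotated pair agrees with the standard pair because it is orthonormal in the standard metric. The doubled pair is a genuinely different metric, so the same arrow measures half as long.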

We will often write the length of vector

And in the

For the rest of this post we’ll stick to a standard metric which takes the two vectors

Projection and Rejection

We’ve been writing the same vector

We’ll now start writing these decompositions generically, in terms of subscripted constants

Clearly

We can generalize “the component along…” to any pair of vectors. For

Here we are making a distinction between the object named "

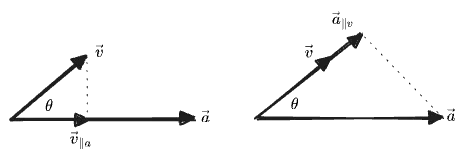

Graphically, the vector projections are:

In each case we find a projection by “dropping a line” from the end of one vector until it meets the line of the other vector at a right angle. Note that

If we know the angle

The terms “projection” and “component” are mostly interchangeable. We will typically use “component” when referring to a “projection on a basis vector”, whereas “projection” is an operation between any two vectors. One also sees the notations

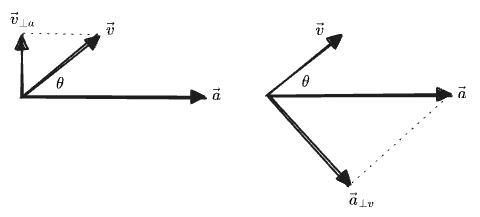

When the vector

Given the projection

The

The

The definition of “rejection” is just “whatever’s left after projection”:

Graphically:

We can also define a scalar rejection, in terms of the angles shown in the graphic:

Note that again the scalar rejection is not simply the length of the vector rejection, because it can be negative. Instead we have, using

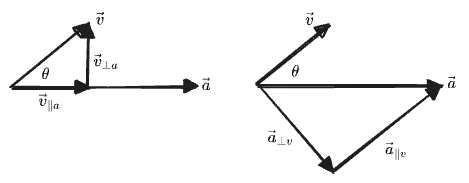

Here we can see the projection and rejection together:

In each case the projection and rejection together are two sides of a triangle, and their sum gives the original vector. Therefore we can write a Pythagorean theorem relating their lengths:
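As a numerical sketch of this relation: in the standard metric, the squared length of the original vector should equal the squared length of its projection plus the squared length of its rejection. The code below computes both parts from the angle between the two vectors. The function name `project_reject` is hypothetical, the tuples are components in the standard metric, and measuring the angle counterclockwise from the second vector to the first is my own assumption; the sign convention for the scalar rejection in the graphic may differ.

```python
import math


def project_reject(a, b):
    """Split a into parts along and across b (standard metric assumed).

    Returns (scalar_proj, scalar_rej, vector_proj, vector_rej).
    The angle is measured counterclockwise from b to a (an assumption).
    """
    len_a = math.hypot(a[0], a[1])
    len_b = math.hypot(b[0], b[1])
    if len_b == 0:
        raise ValueError("cannot project onto the zero vector")
    theta = math.atan2(a[1], a[0]) - math.atan2(b[1], b[0])
    scalar_proj = len_a * math.cos(theta)
    scalar_rej = len_a * math.sin(theta)
    unit_b = (b[0] / len_b, b[1] / len_b)
    vector_proj = (scalar_proj * unit_b[0], scalar_proj * unit_b[1])
    # Rejection is whatever is left after projection.
    vector_rej = (a[0] - vector_proj[0], a[1] - vector_proj[1])
    return scalar_proj, scalar_rej, vector_proj, vector_rej


a = (3.0, 4.0)

# Projecting onto a vector pointing right: both scalars come out positive.
sp, sr, vp, vr = project_reject(a, (2.0, 0.0))
print(sp, sr)     # approximately 3.0 and 4.0

# Reversing b flips the signs of the scalars but not the vector parts.
sp2, sr2, vp2, vr2 = project_reject(a, (-2.0, 0.0))
print(sp2, sr2)   # approximately -3.0 and -4.0

# Pythagorean check: |a|^2 = |projection|^2 + |rejection|^2
lhs = math.hypot(*a) ** 2
rhs = math.hypot(*vp) ** 2 + math.hypot(*vr) ** 2
print(math.isclose(lhs, rhs))   # True
```

The second call shows why the scalar projection and rejection are not simply lengths: they carry a sign that depends on the direction of the vector being projected onto, while the vector projection and rejection stay the same.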

We will be able to give more useful definitions for the projection and rejection, not making use of

- Note that my notation uses

- But… what if we allowed the
